AI in Education: Heeding Ian Malcolm’s Warning from "Jurassic Park"

If, like me, you have been around long enough to recall the summer of 1993, you probably enjoyed the arrival of the box office smash hit "Jurassic Park." A key line in that movie rings true today, perhaps even more ominously than it did amid a Hollywood plot. Mathematician Ian Malcolm (Jeff Goldblum) is grappling with the reality of humans and dinosaurs suddenly sharing the present day, and he wisely cautions the park’s owner: “Your scientists were so preoccupied with whether or not they could that they didn't stop to think if they should.”

Our society now faces a sudden, very real, and unexpectedly potent technological leap: artificial intelligence (AI). While it doesn’t threaten to eat us for lunch (yet), it could become a T. rex-sized problem if we don’t heed Malcolm’s warning.

Defining AI

The topic could fill a volume on its own, but before we go further, allow me to describe AI in a basic sense. AI encompasses machine learning, algorithms, and natural language processing. It is remarkably adept at simulating intelligent behavior and mimicking human dialogue. You’ve no doubt heard by now of platforms like ChatGPT, Microsoft Copilot, and many more that can produce answers, content, and even original artwork in a matter of seconds, where similar creative processes would typically take even highly trained subject matter experts far longer. Within the educational sphere, AI shows up in personalized learning platforms, automated assessment systems, facial recognition tools, and, indeed, tools to combat plagiarism.

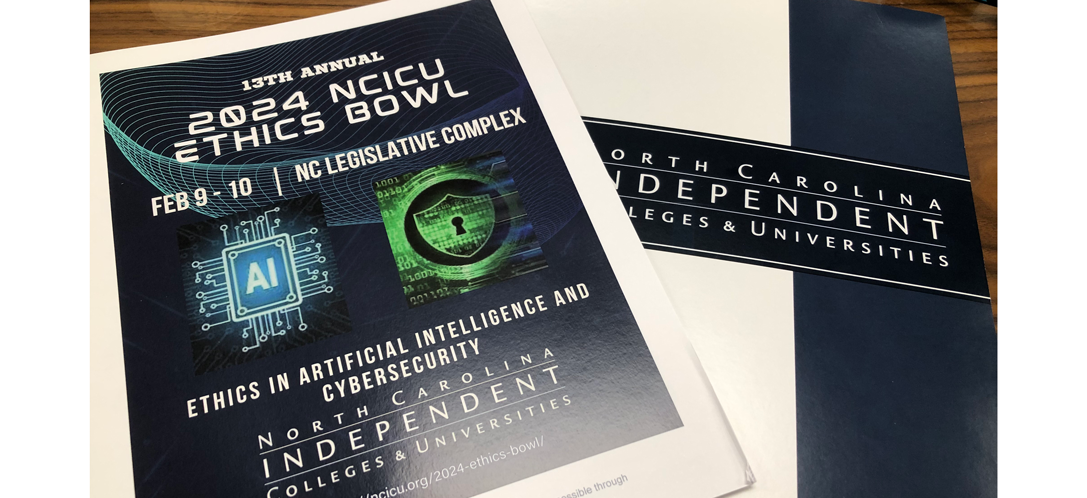

Fortunately, our nation’s higher education institutions are keeping a watchful eye on AI and taking stock of both its powers and threats. Earlier this year, on behalf of BHDP Architecture, I attended the annual NCICU Ethics Bowl Competition in Raleigh. This year, the “ripped from the headlines” cases students were asked to debate focused on the ethical dilemmas that AI and cybersecurity technology present and that demand our attention. I listened as a diverse group of undergraduate students made thoughtful arguments for both sides of each dilemma. They considered:

- The ethical responsibility (or not) of a technology company to decrypt a person’s private device for a government investigation.

- Cybersecurity breaches and public disclosure/reporting obligations.

- Using AI to create fine art and other creative works.

However, the question that rose above all of these was whether it is ethical to use ChatGPT (or similar tools) in academic settings for research, course material creation, examinations, writing, and more.

The NCICU Ethics Bowl challenges students to explore ethical questions about vital societal issues.

The Benefits of AI in Academia

On the one hand, AI in higher education promises several benefits. Learning can become more personalized, with educational content tailored to individual students’ needs. AI algorithms analyze student performance and adjust the curriculum accordingly to improve comprehension and engagement with the material. Educators have long regarded grading as a time-consuming endeavor. AI offers the opportunity to streamline this process and provide students with instant assessment feedback. This, in turn, frees instructors to focus more of their efforts on interacting with students. Finally, through predictive analytics, AI can forecast student outcomes and identify at-risk students who would benefit from early intervention.

The Ethical Concerns of AI Integration

With those benefits, however, ethical concerns abound. The arguments raised are too numerous to cover adequately here, but a few that institutions are considering include privacy, bias and fairness, transparency, and human displacement. Regarding privacy, we know that AI systems collect vast amounts of student data. The question becomes, how do we balance personalized learning with safeguarding a student’s privacy? With a renewed focus in recent years on inherent bias in society and within educational institutions, we must also understand that as AI algorithms learn from historical data, those inherent biases will come along for the ride. If those biases are carried into AI used in education, they could perpetuate inequalities.

Transparency is equally essential when educating and assessing students, yet many current AI systems lack it. Students deserve to understand how algorithms arrive at conclusions, which makes explainable AI models crucial. And finally, as we already see the potential for human displacement in the labor force, higher education must be concerned with this reality, too. As AI automates more tasks, what does that mean for educators in various roles? How do we balance efficiency with human thought, touch, and input?

Navigating the Future of AI in Higher Education

In a recent issue of Campbell Magazine (Vol. 18, Issue No. 1), the institution’s president, Dr. J. Bradley Creed, posed the question, “Are AI and ChatGPT allies—or antagonists—in our quest for human flourishing, seeking the common good and living purposeful lives?” As our society, and higher education in particular, continues navigating the intersection of AI with our daily work, studies, and lives, I can’t help but recognize the immense potential it offers.

For colleges and universities, carefully leveraging AI tools can help institutions make more informed decisions about their physical buildings and space planning. For instance, predictive analytics can forecast space needs based on historical data (enrollment, schedules, space utilization, facility occupancy, etc.) and wider trends across peer institutions. From a sustainability standpoint, AI can optimize energy use by analyzing patterns of use and climate and adjusting building systems accordingly. As deferred maintenance is a common challenge across many campuses, predictive maintenance algorithms can help identify when a given building’s systems are likely to fail or need service, allowing for timely intervention and avoiding downtime.

These and other uses of AI offer institutions a tremendous opportunity to enhance their offerings, both the classroom itself and what’s taught within it. However, as Malcolm warned in "Jurassic Park," we must tread carefully when facing powerful technologies we may not fully understand, ensuring ethical considerations guide our decisions. We can harness AI's benefits while protecting educational values by fostering awareness, transparency, and responsible use, beginning in our educational institutions and extending into the workforce.

Date

July 15, 2024

Topic

Technology

Innovation